InternAloha 2.0: Revamping Scrapers

What is InternAloha?

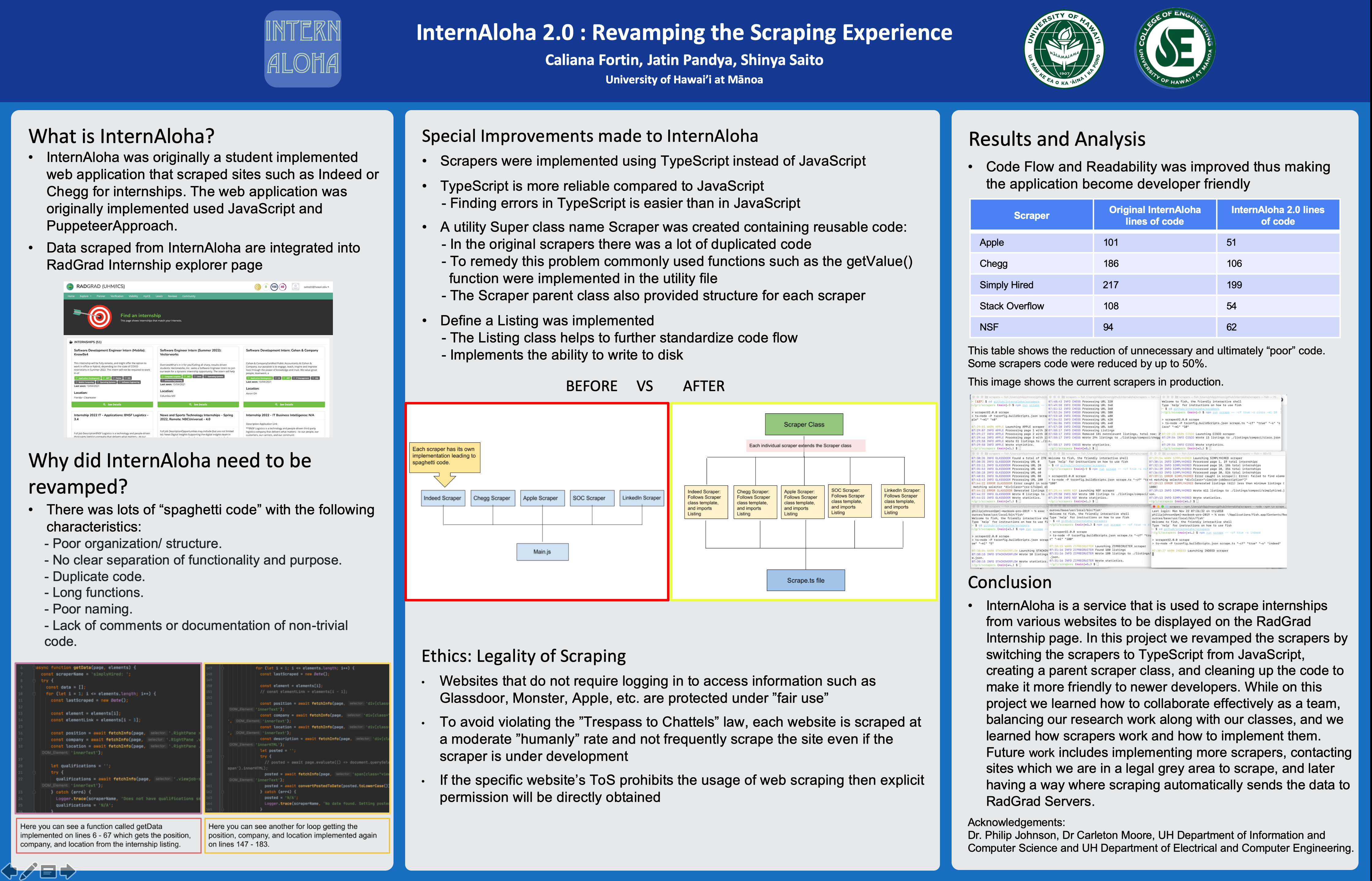

InternAloha is a service that scrapes internship listings from different job sites and outputs them to a JSON file. These listings are then imported into the RadGrad site, where they are shown on the RadGrad Internship Explorer page. On that page, the internships are filtered by each student’s interests and skills. This was all implemented in JavaScript with Puppeteer.

Why did we want to update InternAloha?

We wanted to update InternAloha to make the code more developer friendly, improve the speed of the scrapers, and remove code that did not make sense. To do this, we changed the implementation from JavaScript to TypeScript so that the code is statically typed. We also introduced a parent class to hold code that was shared among different files. In addition, we created a Listing object and a Listings object for handling a collection of listings. The Listing object has fields describing an internship. The Listings object holds an array of Listing objects and has functions for tasks such as checking its length and writing the listings to JSON.
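The structure described above might look roughly like the following TypeScript sketch. It is only an illustration, not the actual InternAloha source: the field names on `Listing`, the method names on `Listings`, and the `Scraper` and `ExampleSiteScraper` classes are all assumptions made for the example.

```typescript
// Hypothetical fields; the real Listing object may use different names.
interface Listing {
  position: string;
  company: string;
  location: string;
  url: string;
  description: string;
}

// Holds an array of Listing objects and the helpers that operate on them.
class Listings {
  private items: Listing[] = [];

  addListing(listing: Listing): void {
    this.items.push(listing);
  }

  length(): number {
    return this.items.length;
  }

  // Serializes the listings as a pretty-printed JSON array.
  toJsonString(): string {
    return JSON.stringify(this.items, null, 2);
  }
}

// Parent class for code shared by every site-specific scraper (assumed design).
abstract class Scraper {
  protected name: string;
  protected listings: Listings = new Listings();

  constructor(name: string) {
    this.name = name;
  }

  // Each subclass supplies its own site-specific extraction logic.
  protected abstract generateListings(): Promise<void>;

  // Shared driver: run the site-specific step, then return the results.
  async scrape(): Promise<Listings> {
    await this.generateListings();
    return this.listings;
  }
}

// A stand-in scraper that adds one hard-coded listing instead of visiting a site.
class ExampleSiteScraper extends Scraper {
  constructor() {
    super('example-site');
  }

  protected async generateListings(): Promise<void> {
    this.listings.addListing({
      position: 'Software Engineering Intern',
      company: 'Example Corp',
      location: 'Honolulu, HI',
      url: 'https://example.com/jobs/1',
      description: 'Placeholder description.',
    });
  }
}
```

With a layout like this, each new scraper only needs to extend the parent class and implement its own extraction step, while typing, collection handling, and JSON output stay in one place.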

Ethics

The legal issues that concerned InternAloha had to do with whether we could scrape certain sites. While researching, we found that as long as the information was public, we had the right to scrape it under “fair use” laws. We also had to stop development on scrapers for sites that require logins, as their internship listings are not public. Finally, we had to make sure we were not violating “Trespass to Chattels” law, so we moderated our activity on the sites to avoid causing a denial-of-service attack.
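One simple way to moderate activity on a site is to visit pages one at a time with a pause between requests. The sketch below illustrates that idea only; it is not taken from the InternAloha code, and the `sleep` and `visitPolitely` helpers, along with the delay value, are hypothetical.

```typescript
// Resolves after the given number of milliseconds.
function sleep(ms: number): Promise<void> {
  return new Promise((resolve) => setTimeout(resolve, ms));
}

// Visits each URL in sequence, waiting delayMs between consecutive visits
// so that the target site never receives a burst of requests.
async function visitPolitely<T>(
  urls: string[],
  visit: (url: string) => Promise<T>,
  delayMs: number,
): Promise<T[]> {
  const results: T[] = [];
  let first = true;
  for (const url of urls) {
    if (!first) {
      await sleep(delayMs); // pause before every visit except the first
    }
    first = false;
    results.push(await visit(url));
  }
  return results;
}
```

In a real scraper, the `visit` callback would be the function that loads a page and extracts a listing. A suitable delay depends on the site and is a judgment call.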

Results and Conclusion

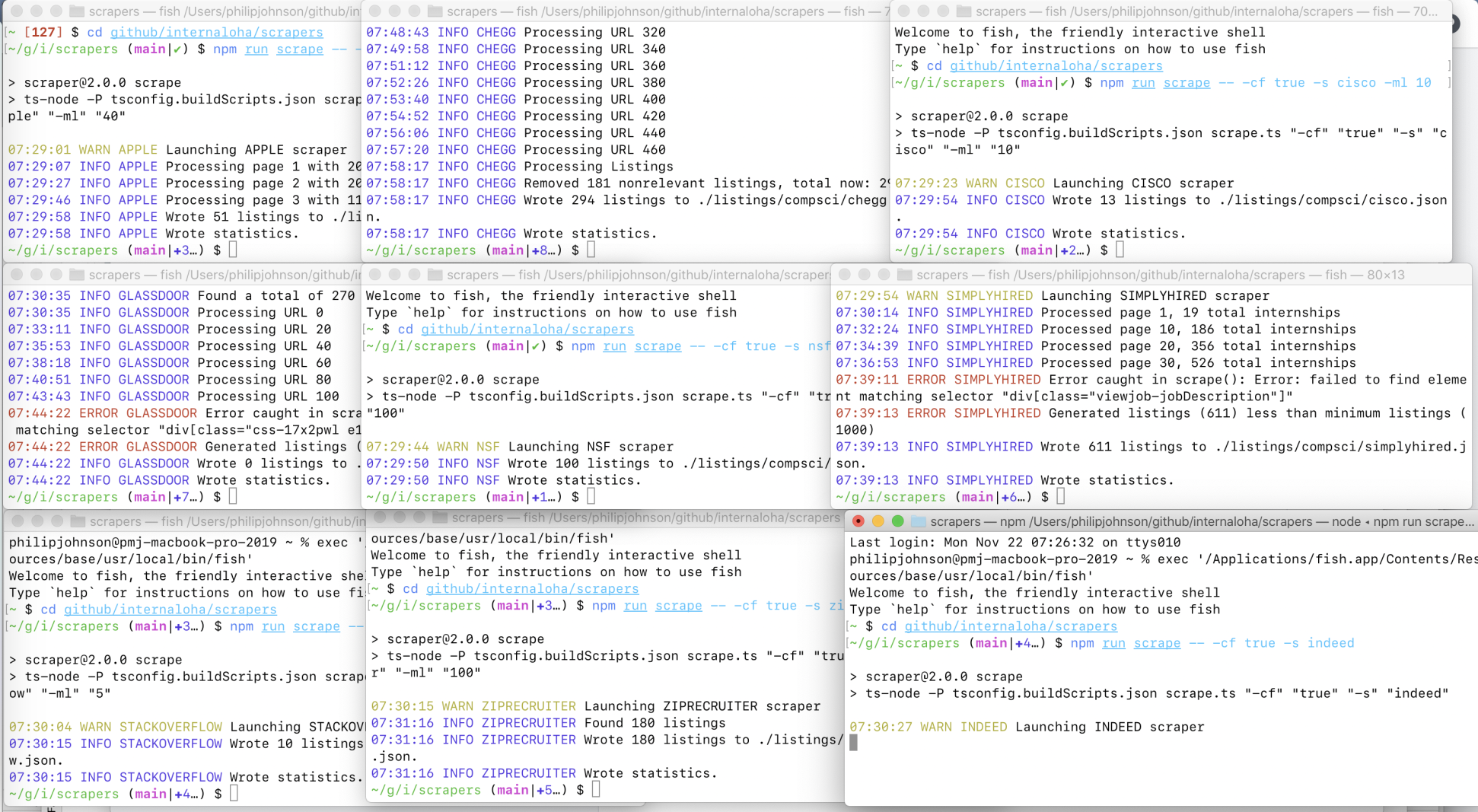

By making the appropriate changes, we were able to reduce the lines of code by up to 50% and increase the speed of the scrapers. We were also able to get the scrapers running in production, and we are able to use the listings on the RadGrad Internship Explorer page. In this project I learned how to work effectively in a team, how to manage my time between research and classes, and how scrapers work and how to implement them. In the future we would like to implement more scrapers, add an option to scrape internships in different countries, contact the sites that we are in a legal grey area to scrape, and eventually have the scrapers automatically send their data to the RadGrad servers.

Link to essay: ‘InternAloha: Revamping Scrapers’

YouTube video on InternAloha 2.0:

Poster on InternAloha 2.0: